Planning with Sequence Models through Iterative Energy Minimization

Abstract

Recent works have shown that sequence modeling can be effectively used to train reinforcement learning (RL) policies. However, the success of applying existing sequence models to planning, in which we wish to obtain a trajectory of actions to reach some goal, is less straightforward. The typical autoregressive generation procedures of sequence models preclude sequential refinement of earlier steps, which limits the effectiveness of a predicted plan. In this paper, we suggest an approach towards integrating planning with sequence models based on the idea of iterative energy minimization, and illustrate how such a procedure leads to improved RL performance across different tasks. We train a masked language model to capture an implicit energy function over trajectories of actions, and formulate planning as finding a trajectory of actions with minimum energy. We illustrate how this procedure enables improved performance over recent approaches across BabyAI and Atari environments. We further demonstrate unique benefits of our iterative optimization procedure, involving new task generalization, test-time constraints adaptation, and the ability to compose plans together.

Method

LEAP generates plans through an iterative Gibbs sampling procedure on a trajectory energy model Eθ(τ). In each iteration, a Masked Language Model (MLM) predicts the energy of alternative actions at selected timesteps in a trajectory. A new trajectory is then generated by resampling the action at each selected timestep, with each action drawn from the energy distribution predicted by the MLM. Repeating these steps yields a trajectory with low energy.
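The resampling loop described above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: `toy_action_energies` is a hypothetical stand-in for the trained MLM, and `temperature` and the rule for choosing which timesteps to resample are assumptions made for the example.

```python
import numpy as np

def toy_action_energies(traj, t, n_actions):
    """Hypothetical stand-in for the MLM: energy of each candidate action at step t.

    In this toy, an action costs 1 for every neighbouring step it disagrees
    with, so smooth trajectories have low energy.
    """
    energies = np.zeros(n_actions)
    for nb in (t - 1, t + 1):
        if 0 <= nb < len(traj):
            energies += (np.arange(n_actions) != traj[nb]).astype(float)
    return energies

def total_energy(traj):
    # Toy trajectory energy: number of adjacent steps that disagree.
    return float(np.sum(traj[1:] != traj[:-1]))

def gibbs_plan(horizon, n_actions, n_iters, rng, temperature=0.5):
    # Start from a random action sequence.
    traj = rng.integers(0, n_actions, size=horizon)
    for _ in range(n_iters):
        # Assumption: resample a random half of the timesteps per iteration.
        selected = rng.choice(horizon, size=max(1, horizon // 2), replace=False)
        for t in selected:
            energies = toy_action_energies(traj, t, n_actions)
            # Lower energy -> higher probability (softmax over negative energy).
            logits = -energies / temperature
            probs = np.exp(logits - logits.max())
            probs /= probs.sum()
            traj[t] = rng.choice(n_actions, p=probs)
    return traj

rng = np.random.default_rng(0)
plan = gibbs_plan(horizon=8, n_actions=3, n_iters=20, rng=rng)
print(plan, total_energy(plan))
```

Because every action is resampled conditioned on the rest of the trajectory, earlier steps can still be revised after later ones have changed, which autoregressive decoding does not allow.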

BabyAI Results

Action plans are generated using an energy function defined globally across actions, so LEAP chooses each action in the context of the entire trajectory. Moreover, plan generation is iterative, which lets us spend additional computation time to find better plans for reaching the final goal.

BabyAI Performance. We evaluate LEAP on four environments as a function of the number of planning iterations. The success rate increases with the number of planning iterations, and LEAP with Iterations=10 or 30 takes fewer steps to finish the tasks than with planning horizon 5.

Panel captions, row 1: Failed (Plan Iterations: 1). Succeed (Plan Iterations: 5). Succeed (Plan Iterations: 30). Failed (Plan Iterations: 1). Succeed (Plan Iterations: 5). Succeed (Plan Iterations: 30).

Panel captions, row 2: Failed (Plan Iterations: 1). Failed (Plan Iterations: 5). Succeed (Plan Iterations: 10). Failed (Plan Iterations: 1). Succeed (Plan Iterations: 5). Succeed (Plan Iterations: 10).

Atari Results

Atari Performance in Breakout, Qbert and Pong games. We further evaluate our approach on Atari games, which change dynamically and have high-dimensional visual state. Because of these features, we train and test our model without a goal state, and update the plan after each step of execution to account for unexpected changes in the world.
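Updating the plan after every executed step amounts to a receding-horizon loop: plan a full action sequence, execute only its first action, observe, and plan again. The sketch below illustrates that loop under stated assumptions. `ToyTracking` and `toy_planner` are hypothetical stand-ins (not an Atari environment and not LEAP's planner), chosen so the example runs on its own.

```python
class ToyTracking:
    """Hypothetical 1-D world: a paddle tries to sit under a moving ball."""

    def reset(self):
        self.paddle, self.ball, self.t = 0, 2, 0
        return (self.paddle, self.ball)

    def step(self, action):
        # action is -1 (left), 0 (stay) or +1 (right)
        self.paddle += action
        self.t += 1
        self.ball = 2 + (self.t % 2)      # the ball moves on its own
        reward = 1.0 if self.paddle == self.ball else 0.0
        done = self.t >= 5
        return (self.paddle, self.ball), reward, done

def toy_planner(obs, horizon=4):
    """Stand-in planner: assumes the ball stays still for the whole horizon."""
    paddle, ball = obs
    direction = (ball > paddle) - (ball < paddle)
    return [direction] * horizon

def run_with_replanning(env, planner, max_steps=100):
    obs = env.reset()
    executed, total_reward = [], 0.0
    for _ in range(max_steps):
        plan = planner(obs)               # plan a whole action sequence
        action = plan[0]                  # but execute only the first action
        obs, reward, done = env.step(action)
        executed.append(action)
        total_reward += reward
        if done:
            break
    return executed, total_reward

actions, score = run_with_replanning(ToyTracking(), toy_planner)
print(actions, score)
```

The planner's assumption about the ball is wrong after one step, so executing the whole plan open-loop would drift; replanning from the latest observation corrects for that at the cost of one planning call per step.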

Property Results

LEAP can generalize to settings in which the environment is changed at test time.

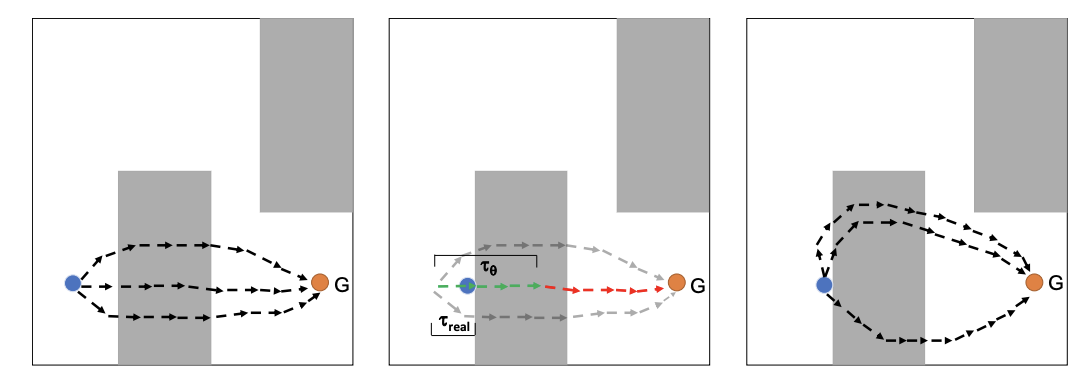

Online Adaptation: multiple lethal lava zones are added at test time, and the LEAP model is required to avoid these zones using a constraint energy function Econstraint(τ).
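One way to picture a test-time constraint is as an extra energy term that is summed with the learned energy before actions are sampled. The sketch below assumes this additive form; the penalty value, the grid cells, and the helper names are all hypothetical and only serve the illustration.

```python
import numpy as np

def sampling_probs(energies, temperature=1.0):
    # Lower energy -> higher probability (softmax over negative energy).
    logits = -np.asarray(energies, dtype=float) / temperature
    logits -= logits.max()
    probs = np.exp(logits)
    return probs / probs.sum()

def constraint_energy(next_cells, lava_cells, penalty=1e3):
    # Hypothetical constraint: a large energy for any action that enters lava.
    return np.array([penalty if cell in lava_cells else 0.0 for cell in next_cells])

# Hypothetical learned energies for three candidate actions at one timestep,
# and the grid cell each action would move the agent into.
learned = np.array([0.2, 1.0, 0.5])
next_cells = [(1, 0), (0, 1), (1, 1)]
lava = {(1, 0)}

unconstrained = sampling_probs(learned)
constrained = sampling_probs(learned + constraint_energy(next_cells, lava))
print(unconstrained, constrained)
```

Without the constraint, the first action has the lowest energy and is sampled most often; with it, that action's probability collapses and the remaining mass is shared by the safe actions, without retraining the learned energy.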

Generalization: we train the LEAP model in easier environments (single-room world) but test it in more challenging environments (maze world). In these settings, as long as the learned function Eθ(τ) generalizes, we can simply use more sampling steps to recover a successful plan in the more complex environment.

Panel captions: Online Adaptation Median Env. Online Adaptation Boss Env. Generalization Train Env. Generalization Test Env.